|

CUV

0.9.201304091348

|


Modules | |

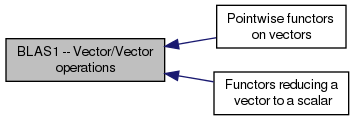

| Pointwise functors on vectors | |

| Functors reducing a vector to a scalar | |

Functions | |

| template<class __value_type , class __memory_space_type , class S > | |

| void | cuv::rprop (tensor< __value_type, __memory_space_type > &W, tensor< __value_type, __memory_space_type > &dW, tensor< S, __memory_space_type > &dW_old, tensor< __value_type, __memory_space_type > &rate, const float &decay=0.0f, const float &sparsedecay=0.0f) |

| Does a gradient descent step using the "RPROP" algorithm. | |

| template<class __value_type , class __memory_space_type , class S > | |

| void | cuv::rprop (tensor< __value_type, __memory_space_type, column_major > &W, tensor< __value_type, __memory_space_type, column_major > &dW, tensor< S, __memory_space_type, column_major > &dW_old, tensor< __value_type, __memory_space_type, column_major > &rate, const float &decay=0.0f, const float &sparsedecay=0.0f) |

| This is an overloaded function, provided for convenience. It differs from the above function only in the argument(s) it accepts: it casts column-major tensors to row-major, since the operation works on linear memory anyway. | |

| template<class __value_type , class __memory_space_type > | |

| void | cuv::learn_step_weight_decay (tensor< __value_type, __memory_space_type > &W, const tensor< __value_type, __memory_space_type > &dW, const float &learnrate, const float &decay=0.0f, const float &sparsedecay=0.0f) |

| Do a step of gradient descent with optional weight decay. | |

| template<class V , class M > | |

| void | cuv::learn_step_weight_decay_momentum (tensor< V, M > &W, tensor< V, M > &momentum, const tensor< V, M > &dW, const float &learnrate, const float &momentum_weight=0.9, const float &decay=0.0f, const float &sparsedecay=0.0f) |

| Same as learn_step_weight_decay, but with momentum. | |

| template<class __value_type , class __memory_space_type > | |

| void | cuv::learn_step_weight_decay (tensor< __value_type, __memory_space_type, column_major > &W, const tensor< __value_type, __memory_space_type, column_major > &dW, const float &learnrate, const float &decay=0.0f, const float &sparsedecay=0.0f) |

| This is an overloaded function, provided for convenience. It differs from the above function only in the argument(s) it accepts: it casts column-major tensors to row-major, since the operation works on linear memory anyway. | |

| void | cuv::learn_step_weight_decay (tensor< __value_type, __memory_space_type > &W, const tensor< __value_type, __memory_space_type > &dW, const float &learnrate, const float &decay=0.0f, const float &sparsedecay=0.0f) |

Do a step of gradient descent with optional weight decay.

| W | Destination matrix |

| dW | Direction of gradient descent. Vector of same size as W. |

| learnrate | Scalar learning rate |

| decay | Scalar L2 weight decay (cost) parameter |

| sparsedecay | Scalar L1 weight decay (cost) parameter |

Calculates W = (1-decay*learnrate) * W + learnrate * dW
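The update rule above can be checked against a small CPU reference. The following is a minimal sketch on `std::vector<float>`, not CUV's implementation: the helper name `learn_step_weight_decay_ref` is hypothetical, and the L1 term (`sparsedecay`) is left out because the formula does not say how it enters the update.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical reference helper, not part of CUV. Applies the documented rule
//   W = (1 - decay*learnrate) * W + learnrate * dW
// element by element. The L1 term (sparsedecay) is intentionally omitted,
// since the documented formula does not specify it.
void learn_step_weight_decay_ref(std::vector<float>& W,
                                 const std::vector<float>& dW,
                                 float learnrate,
                                 float decay = 0.0f) {
    assert(W.size() == dW.size());  // dW must have the same size as W
    for (std::size_t i = 0; i < W.size(); ++i) {
        W[i] = (1.0f - decay * learnrate) * W[i] + learnrate * dW[i];
    }
}
```

With `decay` left at zero the rule reduces to `W += learnrate * dW`; a non-zero `decay` additionally shrinks every weight by the factor `1 - decay*learnrate` on each step.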

| void | cuv::learn_step_weight_decay_momentum (tensor< V, M > &W, tensor< V, M > &momentum, const tensor< V, M > &dW, const float &learnrate, const float &momentum_weight=0.9, const float &decay=0.0f, const float &sparsedecay=0.0f) |

Same as learn_step_weight_decay, but with momentum.

| W | Destination matrix |

| momentum | The accumulated momentum (IN and OUT) |

| dW | Direction of gradient descent. Vector of same size as W. |

| learnrate | Scalar learning rate |

| momentum_weight | How strongly to rely on the accumulated momentum |

| decay | Scalar L2 weight decay (cost) parameter |

| sparsedecay | Scalar L1 weight decay (cost) parameter |
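This page gives no formula for the momentum variant. The sketch below assumes one common formulation: the momentum buffer accumulates `momentum_weight * momentum + dW`, and the accumulated value then replaces `dW` in the weight-decay rule. Both that formula and the helper name `learn_step_weight_decay_momentum_ref` are assumptions for illustration; CUV may compute the step differently.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical reference helper, not part of CUV. ASSUMED update rule:
//   momentum = momentum_weight * momentum + dW
//   W        = (1 - decay*learnrate) * W + learnrate * momentum
// The L1 term (sparsedecay) is omitted because the page does not define it.
void learn_step_weight_decay_momentum_ref(std::vector<float>& W,
                                          std::vector<float>& momentum,
                                          const std::vector<float>& dW,
                                          float learnrate,
                                          float momentum_weight = 0.9f,
                                          float decay = 0.0f) {
    assert(W.size() == dW.size() && W.size() == momentum.size());
    for (std::size_t i = 0; i < W.size(); ++i) {
        // momentum is both read and written (IN and OUT), as documented
        momentum[i] = momentum_weight * momentum[i] + dW[i];
        W[i] = (1.0f - decay * learnrate) * W[i] + learnrate * momentum[i];
    }
}
```

Under this assumption, calling the helper repeatedly with the same `dW` makes the effective step grow toward `learnrate * dW / (1 - momentum_weight)`, which is why the momentum buffer has to persist between calls.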

| void | cuv::rprop (tensor< __value_type, __memory_space_type > &W, tensor< __value_type, __memory_space_type > &dW, tensor< S, __memory_space_type > &dW_old, tensor< __value_type, __memory_space_type > &rate, const float &decay=0.0f, const float &sparsedecay=0.0f) |

Does a gradient descent step using the "RPROP" algorithm.

| W | Destination tensor |

| dW | Direction of gradient descent. Vector of same size as W. |

| dW_old | Direction of gradient descent in the previous step. Vector of same size as W. |

| rate | Vector of same size as W containing separate learnrates for each entry. |

| decay | Scalar L2 weight decay (cost) parameter |

| sparsedecay | Scalar L1 weight decay (cost) parameter |

Updates W according to the "RPROP" algorithm.

Calculates W = (1-decay*rate)*W + rate * dW

where all multiplications are pointwise.

The tensors rate and dW_old are also updated at each step.
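The page does not say how `rate` and `dW_old` are adapted, so the following sketch fills that gap with the textbook RPROP rule and should be read as an assumption rather than a description of CUV. The per-entry rate grows when the gradient sign repeats and shrinks when it flips, and the step follows the sign of `dW`. The constants (1.2, 0.5, and the clamp range), the choice to skip the step after a sign flip, storing only the sign in `dW_old`, and the helper names are all hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Returns -1, 0 or +1 depending on the sign of x.
inline float sign_of(float x) {
    return static_cast<float>((x > 0.0f) - (x < 0.0f));
}

// Hypothetical reference helper, not part of CUV. ASSUMED textbook RPROP:
// per-entry rates are multiplied by 1.2 when the sign of dW matches dW_old,
// by 0.5 when it flips, and clamped to [1e-6, 50]. The L1 term is omitted.
void rprop_ref(std::vector<float>& W,
               const std::vector<float>& dW,
               std::vector<float>& dW_old,
               std::vector<float>& rate,
               float decay = 0.0f) {
    const float eta_plus = 1.2f, eta_minus = 0.5f;
    const float rate_min = 1e-6f, rate_max = 50.0f;
    assert(W.size() == dW.size() && W.size() == dW_old.size() &&
           W.size() == rate.size());
    for (std::size_t i = 0; i < W.size(); ++i) {
        float s = sign_of(dW[i]);
        const float agreement = s * sign_of(dW_old[i]);
        if (agreement > 0.0f) {
            rate[i] = std::min(rate[i] * eta_plus, rate_max);   // same sign: grow
        } else if (agreement < 0.0f) {
            rate[i] = std::max(rate[i] * eta_minus, rate_min);  // flip: shrink
            s = 0.0f;                                           // and skip this step
        }
        // Pointwise update with the per-entry rate, including L2 decay.
        W[i] = (1.0f - decay * rate[i]) * W[i] + rate[i] * s;
        dW_old[i] = s;  // remember the direction used in this step
    }
}
```

Because only the sign of each gradient entry is used, the step size in this sketch depends on `rate` alone and not on the gradient magnitude, which is the defining property of RPROP.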

1.8.1.2